Italian real estate

2. Detailed description

This page contains all the details on how this project was developed, from start to finish. The other parts of this project are:

Raw data extraction and datalake population

The extraction of the raw data is achieved by scraping immobiliare.it, and is performed by the webscraping script.

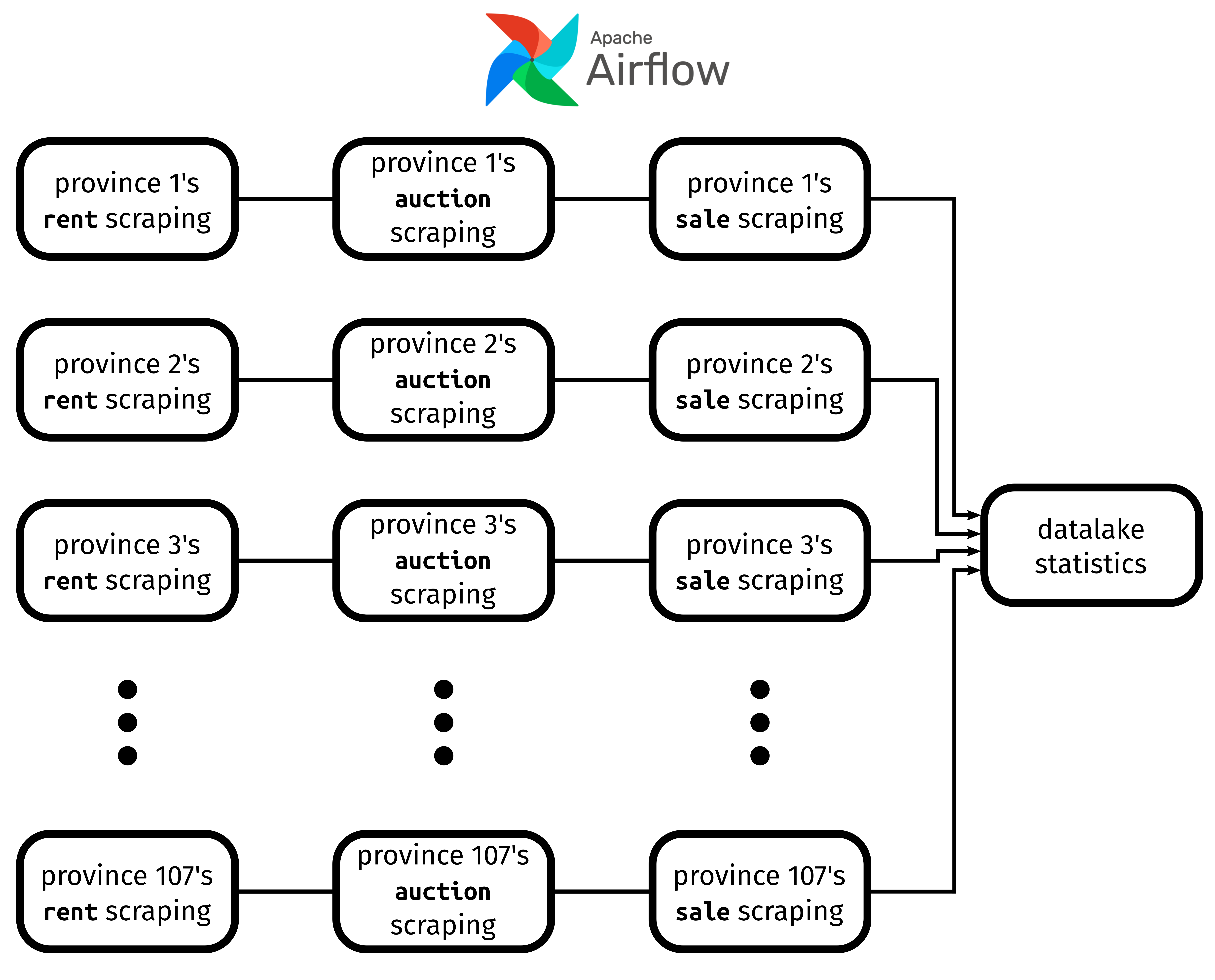

There are 107 provinces in Italy, and the website contains three different types of listings: sale, auction and rent. The scraping is orchestrated with Apache Airflow as follows: for each province, the script scrapes data for the rent listings first, then it scrapes auction listings and finally sale, processing each province in parallel. After fetching the raw data (i.e., the HTML source code of the listing’s webpage), the script loads it into a local instance of a MongoDB datalake.

In this data lake, each listing’s MongoDB document contains the following information:

- the listing’s unique ID (which is simply the same ID of the listing used on the website)

- the listing page’s HTML source code

- the name and two-letter code of the province where the listing is from

- the ID of the parent listing (if it exists)

- a list of the ID of the child listings (if they exist)

“Parent” and “child” listings are used, for example, when there are multiple houses on sale in the same building (and this case, the parent listing is a listing that shows info about the building, while the child listings are the individual listings of each unit on sale).

Here is a schematic representation of how Airflow orchestrates this part:

The raw data for this project was scraped once in January and once in February 2025.

Relevant data extraction and MongoDB data warehouse population

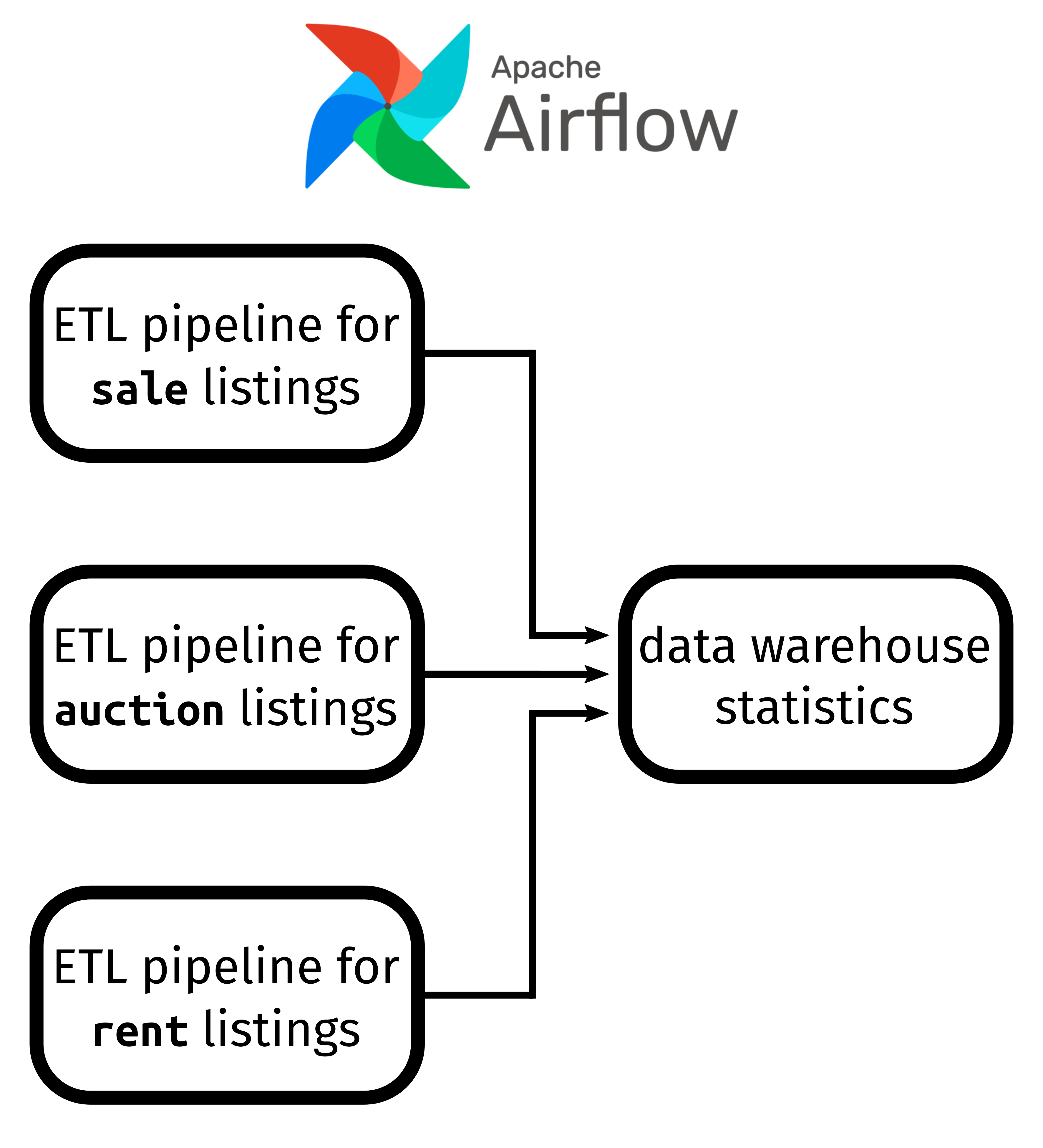

After the extraction of the raw data, the MongoDB migration script uses Airflow to extract only the relevant information from each listing, perform some basic transformation and load it in a non-relational data warehouse, hosted again as a local MongoDB instance.

These ETL pipelines are organized by listing type, i.e. Airflow executes in parallel the ETL pipeline of all rent, auction and sale listings in parallel before logging the statistics of the newly populated data warehouse:

In this data warehouse, each listing’s document contains the following information:

-

_id: the unique MongoDB ID for each document, taken from the listing’s ID on the website -

data: this field contains all the data relative to the listing. Since the same listing can be in principle scraped on different dates, this field is an array where each element is a dictionary containing the following information:-

scraping_date: date the listing was scraped -

listing_features: contains basic information on the listing:-

price: asking price forsalelistings, minimum offer forauctionlistings and monthly rent forrentlistings -

total_surface: property’s total surface, in squared meters -

price_per_sq_mt:price/total_surface -

listing_info: contains some of the listing’s metadata:-

listing_age: number of days since the creation of the listing -

listing_last_update: number of days since the listing was last modified -

is_listing_new:Trueif the listing has not been modified since its creation,Falseotherwise -

has_parent:Trueif the listing has a parent listing,Falseotherwise -

has_child:Trueif the listing has at least one child listing,Falseotherwise -

seller_type: what legal entity is selling/renting the property (e.g., private citizen, real estate agency, court etc.) -

number_of_photos: number of pictures in the listing

-

-

-

additional_costs: contains information about other potential additional costs:-

other_costs:-

condominium_expenses: if present, monthly condominium expenses (e.g., for elevator maintenance, gardening of common green areas etc.), in euros -

heating_expenses: yearly expenses for heating the property, in euros

-

-

mortgage_rate: average mortgage rate available from the website at the time of scraping

-

-

auction_info: forauctionlistings, this is a dictionary that contains information about the auction:-

auction_end_date: date when the auction will end -

deposit_modality: description of how the deposit for the property should be made -

auction_type: type of auction (e.g., with/without competitive bidding etc.) -

is_open:Trueif the auction is still open,Falseotherwise -

minimum_offer: auction’s minimum offer -

procedure_number: reference number for the auction’s legal procedure -

auction_court: name of the court responsible for this auction

-

-

cadastral_info: forauctionlistings, this is a dictionary containing cadastral information on the property:-

cadastral: cadastral reference number for the property -

cadastral_additional_info: if present, additional cadastral info -

sub_cadastral_info: if present, more additional cadastral info

-

-

surface_composition: if not empty, this field is an array containing dictionaries. Each dictionary contains information on the different parts of the property (i.e., the house, the backyard, the garage etc.):-

element: name of the current element (e.g., “house” or “garage”) -

floor: at what floor of the property is the element located -

surface: its surface in squared meters -

percentage: what percentage of the property the element makes up -

commercial_surface: the surface of the ENTIRE element (e.g., including walls) -

surface_type: this field can either be “principal” or “accessory”

-

-

availability: contains information on either if the property is immediately available or not -

type_of_property: this is a dictionary containing the following information:-

class: this contains information about the type of property (e.g., independent house or apartment) -

category: this fields indicates if the property is (for example) residential or commercial real estate -

is_luxury:Trueif the property is registered as luxury real estate (e.g., castles)

-

-

condition: condition of the property (e.g., “good”, “needs renovation” etc.) -

rooms_info: this is a dictionary that contains information on the property’s rooms:-

bathrooms_number: total number of bathrooms, ranging from 1 to 4 (where 4 means “more than 3”) -

bedrooms_number: total number of bedrooms -

total_room_number: total number of rooms in the property, ranging from 1 to 6 (where 5 means “more than 5”) -

rooms_description: concise textual description of the property’s rooms -

kitchen_status: type of kitchen (e.g., open kitchen, kitchenette or cooking corner) -

garage: if present, textual description of how many garages/parking spots are associated with the property -

property_floor: at what floor the property is located; 0 is ground floor

-

-

additional_features: this is a list of strings, each indicating a different feature that the listing has (e.g., fireplace, private garden, furniture, balcony etc.) -

building_info: this is a dictionary containing information about the building where the property is:-

has_elevator:Trueif the building has an elevator,Falseotherwise -

building_usage: what the building is used for (e.g., apartments, offices, factory, hotel etc.) -

building_year: year the building was built -

total_building_floors: how many floors there are in total -

total_number_of_residential_units: if the listing is of a residential building, this is the total number of units -

work_start_date: for new constructions, day the works started (or will start) -

work_end_date: for new constructions, day the works ended (or will end) -

work_completion: this is a number that measures how far the construction works are far along. It’ 0 onwork_start_date, 1 onwork_end_date; negative numbers mean that the works haven’t started yet, and numbers larger than 1 mean that the works have finished

-

-

energy_info: this is a dictionary containing information on the energy efficiency of the property:-

is_zero_energy_building:Trueif the property’s building is certified as being “zero energy” (i.e., extremely energy efficient) -

heating_type: type of heating (e.g., independent vs. centralized, radiators vs. fan coils, gas powered vs. solar powered etc.) -

energy_class: EU energy label for the property. The classification system goes from G (the least energy efficient) to A (the most energy efficient). Class A is also divided into four sub-classes, A1 to A4 -

energy_consumption: an estimate of the property’s energy consumption, in kWh/squared meter/year -

air_conditioning: if present, the type of air conditioning (e.g., hot/cold, only cold or only hot, autonomous vs. centralized etc.)

-

-

location_info: this is a dictionary containing information about the listing’s location:-

latitude: listing’s latitude -

longitude: listing’s longitude -

region: name of the Italian region where the listing is located -

province: name of the Italian province where the listing is located -

province_code: two-letter code of the listing’s province -

city: name of the city (or town) where the list is located -

macrozone: if present, name of the larger neighborhood where the listing is located -

microzone: if present, name o the area within the neighborhood where the listing is located -

locality: if present, name of the town or village where the property is located -

address: name of the street where the property is located -

street_number: name of the property’s street number

-

-

text_info: this is a dictionary containing all the textual information on the listing:-

title: listing’s title -

caption: listing’s caption -

description: full textual description of the listing

-

-

-

child_listings: in case of a parent listing, this field is an array with the IDs of the child listings, otherwise it’snull -

parent_listing: in case of a child listing, this is the ID of the parent listing -

province_name: name of the province from which the listing was scraped -

province_code: two-letter code of the province name

PostgreSQL data warehouse population

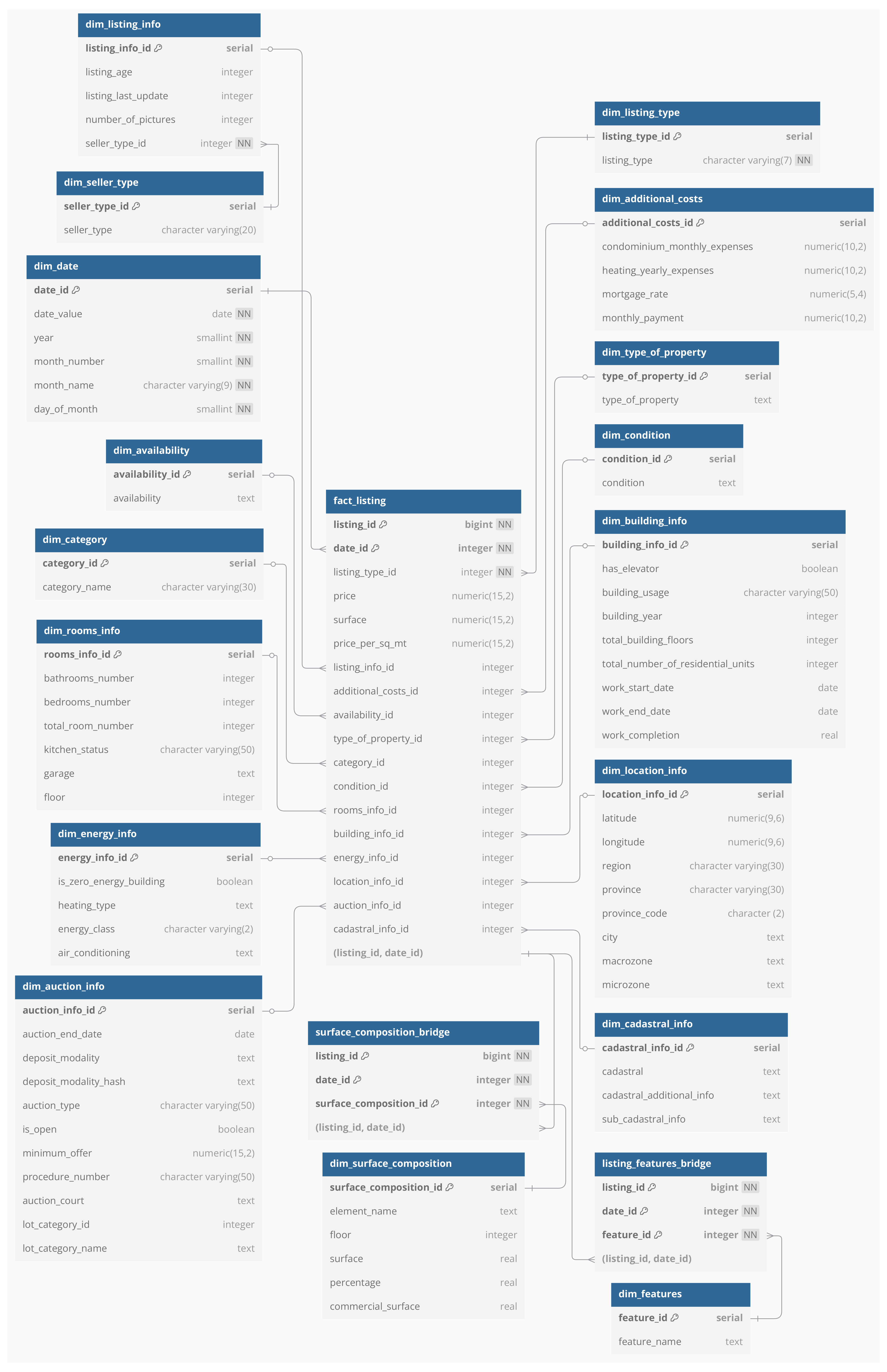

At this point the data warehouse was migrated into a relational database, hosted as a local PostgreSQL instance, with the MongoDB to PostgreSQL migration script.

This warehouse was build with a snowflake schema to ensure data consistency and reduce data redundancy. Here is a graphical representation (ERD) of the PostgreSQL data warehouse’s structure:

Several of the fields in this warehouse contain text or words in Italian. Therefore, this script also takes care of translating into English the fields that will be used in the remainder of the project. Translations are done automatically with a local instance of LibreTranslate, with some manual adjustments for real estate-specific jargon.

Since there are a lot of records in the database (~1 million), the script divides the data migration into batches of 10,000 records and uses dynamic task generation to achieve this.

Synthetic data generation

Once the PostgreSQL data warehouse has been populated and the useful fields have been translated into English, the synthetic data generation script extracts relevant information from the PostgreSQL warehouse and uses it to create a pandas dataframe, with some data transformations in the process:

-

listing_id: same as in the PostgreSQL warehouse -

listing_type: same as in the PostgreSQL warehouse -

price: same as in the PostgreSQL warehouse -

surface: same as in the PostgreSQL warehouse -

seller_type: same as in the PostgreSQL warehouse -

condominium_monthly_expenses: same as in the PostgreSQL warehouse -

heating_yearly_expenses: same as in the PostgreSQL warehouse -

type_of_property: same as in the PostgreSQL warehouse -

condition: same as in the PostgreSQL warehouse -

category_name: same as in the PostgreSQL warehouse -

total_room_number: same as in the PostgreSQL warehouse -

bathrooms_number: same as in the PostgreSQL warehouse -

kitchen_status: same as in the PostgreSQL warehouse -

garage:1if the property has at least one garage/parking spot,0otherwise -

floor: same as in the PostgreSQL warehouse -

has_elevator: same as in the PostgreSQL warehouse (with1forTrueand0forFalse) -

building_usage: same as in the PostgreSQL warehouse -

building_year: same as in the PostgreSQL warehouse -

total_building_floors: same as in the PostgreSQL warehouse -

is_zero_energy: same as in the PostgreSQL warehouse (with1forTrueand0forFalse) -

heating_type: same as in the PostgreSQL warehouse -

energy_class: same as in the PostgreSQL warehouse, but converted to numbers (fromG→10toB→5,A1→4, and so on untilA4→1) -

air_conditioning: same as in the PostgreSQL warehouse -

latitude: same as in the PostgreSQL warehouse -

longitude: same as in the PostgreSQL warehouse -

region: same as in the PostgreSQL warehouse -

province: same as in the PostgreSQL warehouse -

features: the dataframe then contains a series of one-hot encoded columns, one for each of the additional features that can potentially be found in a listing; if the column contains1then that listing has that particular feature, otherwise it contains0

This data is then used to generate synthetic data with a custom algorithm1 (based on the K Nearest Neighbors algorithm) that I developed. Here is a schematic description of how the algorithm works:

- For a given listing type (i.e.,

rent,auctionorsale), the algorithm randomly draws one of that type - The algorithm looks for the 5 nearest neighbors, where the distance metric is a weighted average of the distances of

price,surface,latitudeandlongitude. In other words, we look for 5 physically close listings that have similarpriceandsurfaceas the one selected - A new synthetic listing is created by taking the weighted average of the numerical features (e.g.,

price,surface,latitudeandlongitude) of the neighbors, while for each categorical feature we choose the most frequent among the neighbors - The generation of synthetic listings is repeated until desired

- A post-processing step makes sure that all the listing’s coordinates are within the borders of the Italian provinces they are supposed to belong

This algorithm, therefore, ensures that none of the synthetic listings contains exactly the same information as the original dataset, but the statistical properties of the data are left basically unchanged.

The synthetic data generation script also takes advantage of Tensorflow’s GPU acceleration

I used this algorithm to generate a number of synthetic listings of each type that resembles the number in the original dataset.

ML rent predictor model training

Once the synthetic data is ready, the rent predictor script takes care of the creation of a Machine Learning model to predict the cost of the rent for real estate listings. In other words, we extract the rent listings from the synthetic data to train a rent predictor model, with the idea to then apply it to auction and sale listings to determine the amount of monthly rent that can be earned after buying a particular property.

Since there are potentially many non-linear and non-trivial relationships between the listings’ features, I opted for a Random Forest Regressor model. All details can be found in the rent predictor script.

After training the model and making sure it’s doing an acceptable job, the value of the rent is predicted for all sale and auction listings, and the results are put in a dataset for visualization with Tableau.

Data visualization with dashboard

The final result of this project is a Tableau dashboard, which can be found here.

Footnotes

-

I have originally tried to train a generative model (CTGAN from the Synthetic Data Vault) to create the synthetic data. The task proved too difficult to tackle with the computational power I had available at the time I was working on this project (i.e., my own personal laptop): the CTGAN was only able to learn the distributions of all the listing’s features, but not their correlations. Therefore, critical information like the relationship between location and price was completely lost. For this reason I took a step back and developed my own algorithm as described here. ↩